Multi-scale, data-driven and anatomically constrained deep learning image registration for adult and fetal echocardiography

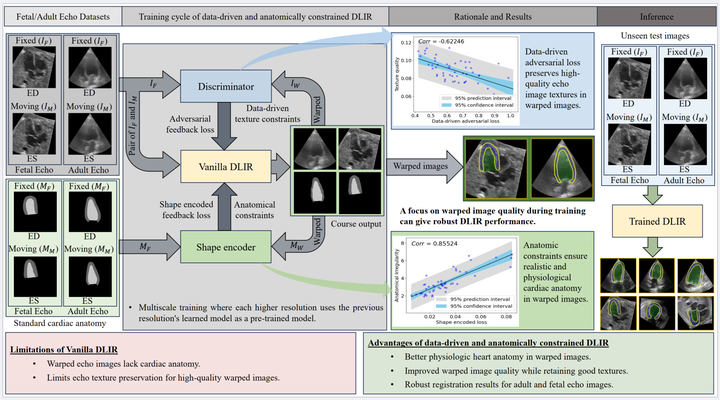

Proposed data-driven and anatomically constrained DLIR

Abstract

Registration of temporal echocardiography images is important for cardiac motion estimation, myocardial strain assessment, and stroke volume quantification. Deep learning image registration (DLIR) is a promising approach for achieving consistent and accurate registration at low computational cost. We propose that placing greater emphasis on the anatomical plausibility and image texture of the warped image yields more robust results, and we show that the resulting approach is robust enough to be applied to both fetal and adult echocardiography. Our proposed framework includes (1) an anatomical shape-encoded loss to preserve physiological myocardial and left ventricular topologies, (2) a data-driven loss to preserve good texture features, and (3) multi-scale training of the data-driven and anatomically constrained algorithm to improve accuracy. Our experiments show that the shape-encoded loss is strongly correlated with good anatomical topology, and the data-driven loss with good image texture. The two losses improve different, non-overlapping aspects of the registration results. Using the public CAMUS adult dataset and our fetal dataset, we demonstrate that these methods can successfully register both adult and fetal echocardiography despite the inherent differences between the two. Our approach also outperforms traditional non-deep-learning registration methods regarded as gold standards, including optical flow and Elastix, and could translate into more accurate and precise clinical quantification of cardiac ejection fraction, demonstrating its potential clinical utility.
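To make the three components more concrete, here is a minimal NumPy sketch of how a multi-scale composite registration loss of this general kind could be assembled. It is not the authors' implementation, because the abstract does not define the loss terms. Here the shape-encoded loss is replaced by a soft Dice term on warped and fixed anatomical masks. The data-driven texture loss is replaced by a gradient-magnitude comparison. The multi-scale component is approximated by averaging the loss over an average-pooled image pyramid. All function names, the weights (`w_sim`, `w_shape`, `w_tex`, `w_smooth`) and the pyramid `factors` are hypothetical.

```python
import numpy as np

# Hypothetical stand-ins: the abstract does not specify the exact form of any
# loss term, so each function below is an illustrative assumption.

def soft_dice(a, b, eps=1e-6):
    """Soft Dice overlap between two masks with values in [0, 1]."""
    inter = np.sum(a * b)
    return (2.0 * inter + eps) / (np.sum(a) + np.sum(b) + eps)

def texture_features(img):
    """Crude texture descriptor (assumption): per-pixel gradient magnitude."""
    gy, gx = np.gradient(img)
    return np.sqrt(gy ** 2 + gx ** 2)

def smoothness(disp):
    """Mean squared finite differences of a displacement field shaped (2, H, W)."""
    dy = np.diff(disp, axis=1)
    dx = np.diff(disp, axis=2)
    return np.mean(dy ** 2) + np.mean(dx ** 2)

def downsample(x, factor):
    """Average-pool the last two axes by an integer factor that divides H and W."""
    *lead, h, w = x.shape
    x = x.reshape(*lead, h // factor, factor, w // factor, factor)
    return x.mean(axis=(-3, -1))

def composite_loss(warped, fixed, warped_mask, fixed_mask, disp,
                   w_sim=1.0, w_shape=1.0, w_tex=1.0, w_smooth=0.1):
    """Weighted sum of intensity, shape, texture and smoothness terms."""
    sim = np.mean((warped - fixed) ** 2)               # intensity similarity
    shape = 1.0 - soft_dice(warped_mask, fixed_mask)  # stand-in for the shape-encoded loss
    tex = np.mean((texture_features(warped) - texture_features(fixed)) ** 2)  # stand-in for the data-driven loss
    reg = smoothness(disp)                            # penalizes irregular displacement fields
    return w_sim * sim + w_shape * shape + w_tex * tex + w_smooth * reg

def multiscale_loss(warped, fixed, warped_mask, fixed_mask, disp, factors=(1, 2, 4)):
    """Average the composite loss over an image pyramid."""
    total = 0.0
    for f in factors:
        # Displacements are in pixels, so they shrink by the same factor as the grid.
        total += composite_loss(downsample(warped, f), downsample(fixed, f),
                                downsample(warped_mask, f), downsample(fixed_mask, f),
                                downsample(disp, f) / f)
    return total / len(factors)

# Usage: a perfect match with zero displacement gives zero loss.
rng = np.random.default_rng(0)
fixed = rng.random((16, 16))
mask = np.zeros((16, 16))
mask[4:12, 4:12] = 1.0
zero_disp = np.zeros((2, 16, 16))
print(multiscale_loss(fixed, fixed, mask, mask, zero_disp))  # 0.0
```

Because each term penalizes a different kind of error, a misaligned mask raises only the shape term while a texture mismatch raises the intensity and texture terms. In an actual training loop these terms would be written in a differentiable framework and driven by a network that predicts `disp`.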