Proposed framework

Abstract

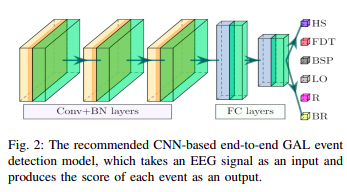

People with neuromuscular dysfunction or amputated limbs need automated prosthetic devices. Developing such prostheses requires precise detection of the brain's motor activity during Grasp-and-Lift (GAL) tasks. Because Electroencephalography (EEG) is low-cost and non-invasive, it is widely preferred for detecting motor actions when controlling prosthetic tools. This article automates the detection of hand movement activity, namely GAL events, from 32-channel EEG signals. The proposed pipeline combines preprocessing with end-to-end detection, eliminating the need for hand-crafted feature engineering. Preprocessing consists of denoising the raw signal with a Discrete Wavelet Transform (DWT), a highpass filter, or a bandpass filter, followed by data standardization. The detection step uses a Convolutional Neural Network (CNN)- or Long Short-Term Memory (LSTM)-based model. All experiments use the publicly available WAY-EEG-GAL dataset, which contains six different GAL events. In the best experiment, the proposed framework achieves an average area under the ROC curve of 0.944 using DWT-based denoising, data standardization, and the CNN-based detection model. This result indicates that the method detects GAL events from EEG signals well, making it applicable to prosthetic appliances, brain-computer interfaces, robotic arms, and similar systems.
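Two steps named above, data standardization and the average area under the ROC curve, can be illustrated with a minimal sketch. It assumes that standardization means per-channel zero-mean, unit-variance scaling and that the reported average is an unweighted mean of the per-event AUCs. The abstract confirms neither detail, and the function names `standardize`, `roc_auc`, and `mean_auc` are hypothetical.

```python
from statistics import mean, pstdev


def standardize(channel):
    """Scale one EEG channel (a list of samples) to zero mean and unit variance.

    Assumption: per-channel z-scoring; the abstract only says "data standardization".
    """
    mu = mean(channel)
    sigma = pstdev(channel)
    if sigma == 0:
        # A constant channel carries no information; map it to zeros.
        return [0.0 for _ in channel]
    return [(x - mu) / sigma for x in channel]


def roc_auc(labels, scores):
    """Area under the ROC curve for one binary event.

    Computed as the probability that a positive sample receives a higher
    score than a negative one, with ties counted as 0.5. This pairwise form
    is quadratic in the number of samples and is meant for illustration only.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean_auc(labels_per_event, scores_per_event):
    """Unweighted mean of the per-event AUCs (assumed averaging scheme)."""
    return mean(roc_auc(y, s) for y, s in zip(labels_per_event, scores_per_event))


if __name__ == "__main__":
    # Toy data for two events; the dataset described above has six.
    labels = [[0, 1], [0, 0, 1, 1]]
    scores = [[0.2, 0.9], [0.1, 0.4, 0.35, 0.8]]
    print(mean_auc(labels, scores))
```

Under this averaging scheme every event contributes equally to the reported figure, regardless of how often it occurs in the recordings.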